Guess what'll happen with tons of LLM output in the coming generation of LLM input data!

@dat @nonfedimemes Yeah! As far as I could investigate this issue, it seems like this specific model, when not given any prompt, will just look into its latent space and accidentally trick itself into thinking it's a 1997 chatbot lmao

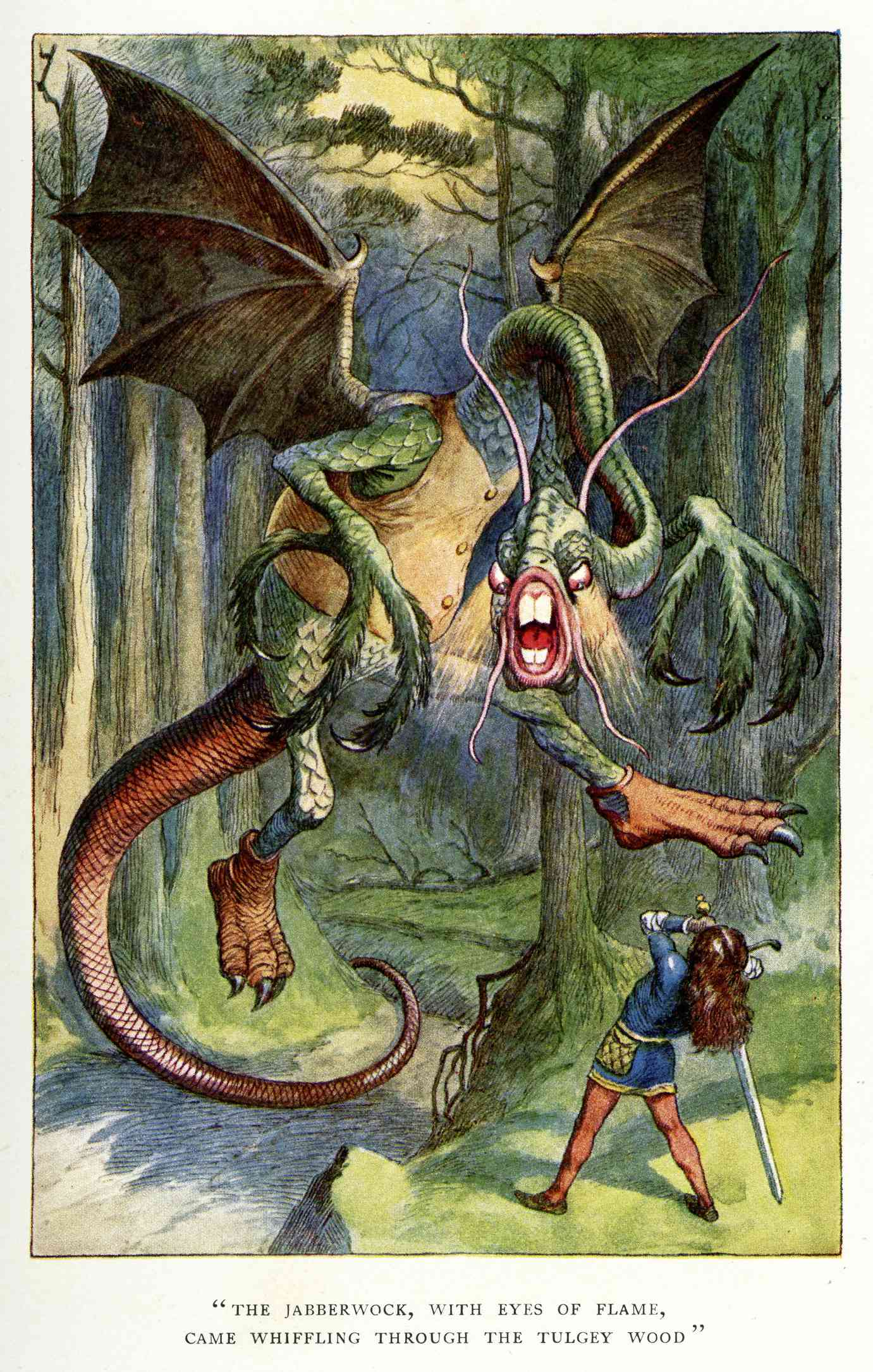

@nonfedimemes They need the Vorpal Blade, of course.

Sorry, I mean the Burpal Blade.

@nonfedimemes Brillig!

@dat @nonfedimemes For some reason, LLMs also seem to love Russian propaganda.

@nonfedimemes Don't ask me why, but A. I love this & B. This is the one AI I'd be willing to use.

@nonfedimemes 'Twas brillig and the vibey codes

Did gyre and gimbal in the wait.

How do they mean 'manifesting' here?

Just need to find a Vorpal Blade, which is easiest if you're playing a neutral hero.

Snicker-snack!

@nonfedimemes this be jabberwacky according to the interweb

@nelson @dat @nonfedimemes also keep in mind they were using a base model, which doesn't follow instructions or anything, it's pure text autocomplete. Without post-training it doesn't have a concept of a "user" and "assistant". It's probably interpreting the input text as a chat log, so it continues it with more chat log.

These kinds of models are very fun to play with, but even more useless than regular (instruct-trained) LLMs.
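To make the base vs. instruct difference concrete, here is a minimal sketch of what each kind of model is handed as input. The role markers are hypothetical, made up for illustration; real instruct models each define their own template.

```python
# Hypothetical role markers, for illustration only.
# Real instruct models each define their own chat template.
USER_TAG = "<|user|>"
ASSISTANT_TAG = "<|assistant|>"


def base_model_input(text: str) -> str:
    """A base model gets the raw text and simply continues it."""
    return text


def instruct_model_input(text: str) -> str:
    """An instruct model's input is wrapped in role markers and ends
    with an open assistant turn, which the model then completes."""
    return f"{USER_TAG}\n{text}\n{ASSISTANT_TAG}\n"


print(repr(base_model_input("hi")))      # 'hi'
print(repr(instruct_model_input("hi")))  # '<|user|>\nhi\n<|assistant|>\n'
```

With no such wrapper, a bare "hi" is just the start of some document, and a chat log is one plausible document that begins that way.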

@nonfedimemes Well don't say hi

@starsider @nelson @dat @nonfedimemes "Interprets"? Can a random string generator interpret anything? "Thinks"? Is a random string generator somehow alive?

Stop pretending your silly oracle machine is anything but.

@luyin @starsider @dat @nonfedimemes

hashtag ai slop, hashtag owned, hashtag im better than you

i also hate ai, i don't believe ai thinks, i don't think any educated person in this space believes that ai is anything but a stochastic parrot. using words such as "thinking" is merely for making the language more accessible to people who are not well versed in this topic.

do better.

@luyin @nelson @dat @nonfedimemes What part of my message made you think that I consider LLMs anything other than fancy autocomplete machines? Was it really the word "interpreting"? That's a very common word in the world of programming languages, and nobody considers a code interpreter or compiler "intelligent", or says that it "thinks" in any way related to sentience.

Note how I called regular LLMs "useless" in my previous message. An AI evangelist would never say that.

Please don't be so obsessed with words without looking into the context. I have said many times that a computer "is thinking" with the meaning of "it's taking its time to compute something", many years before all this genAI craze. It's the same with LLMs. When I say that it "thinks" I don't really think it's thinking in any way that resembles a sentient being: they're not capable of learning through experience, they just parrot whatever tokens are most likely in their statistical model given a previous input.
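A toy sketch of that "most likely next token" idea, assuming a word-level bigram count table in place of a real neural network (real LLMs are vastly larger and usually sample instead of always taking the top token, but the loop has the same shape). The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data" that happens to look like a chat log.
CORPUS = "user: hello bot: hi user: hello bot: hi user: bye bot: cya"


def train_bigram(corpus: str) -> dict:
    """Count, for every token, which tokens followed it in the corpus."""
    counts = defaultdict(Counter)
    tokens = corpus.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def autocomplete(model: dict, prompt: str, max_new_tokens: int = 5) -> str:
    """Repeatedly append the most frequent follower of the last token."""
    tokens = prompt.split()
    if not tokens:
        return prompt
    for _ in range(max_new_tokens):
        followers = model.get(tokens[-1])
        if not followers:  # token never seen with a follower: stop
            break
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)


model = train_bigram(CORPUS)
print(autocomplete(model, "user:", 4))  # user: hello bot: hi user:
```

Nothing in `autocomplete` updates the counts, so the table is exactly the same after a thousand calls as after one: it only replays statistics from its training text.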